Throughout most of 2022 I helped Everyday Robots (a spinoff from Google X) develop their approach to sound design, character and choreography. I developed invention frameworks; composed a range of sound palettes for the robots; built software and hardware prototyping rigs to help us test them out; and helped the in-house team frame and communicate the work around the company.

Framing and mapping

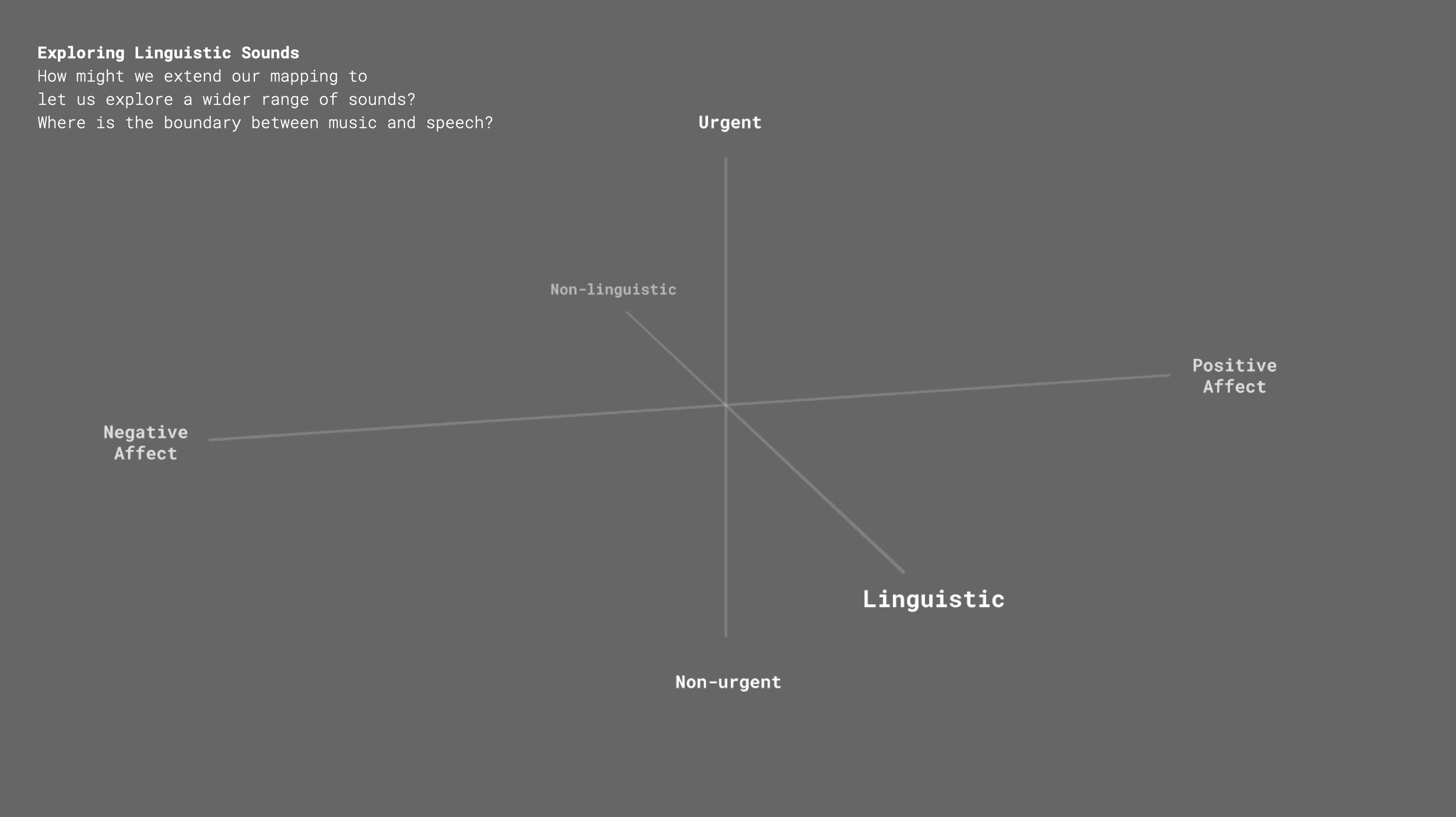

I typically start jobs like this with some quick maps and diagrams – just enough to help us start making a useful range of sketch prototypes. What do these robots need to communicate, how might they do it, and in what contexts will it happen? I landed on three axes to plot our ideas on:

• Urgency, i.e. from information and instruction to warnings and alerts.

• Affect, i.e. from ‘everything is great’ to ‘something bad happened’.

• Non-verbal/Verbal, i.e. from beeps and tones to full speech.
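As a rough illustration of how ideas can be plotted on these three axes, here is a minimal Python sketch. It is not the framework used on the project: the `SignalIdea` class, the 0 to 1 scaling, and every coordinate below are assumptions made up for the example.

```python
from dataclasses import dataclass
import math


@dataclass(frozen=True)
class SignalIdea:
    """One sound idea placed on the three axes, each scaled 0.0 to 1.0 (assumed scale)."""
    name: str
    urgency: float  # 0 = information/instruction, 1 = warning/alert
    affect: float   # 0 = 'everything is great', 1 = 'something bad happened'
    verbal: float   # 0 = beeps and tones, 1 = full speech

    def position(self) -> tuple[float, float, float]:
        return (self.urgency, self.affect, self.verbal)


def distance(a: SignalIdea, b: SignalIdea) -> float:
    """Euclidean distance between two ideas in axis space."""
    return math.dist(a.position(), b.position())


def nearest(target: SignalIdea, ideas: list[SignalIdea]) -> SignalIdea:
    """Return the existing idea that sits closest to the target on the map."""
    return min(ideas, key=lambda idea: distance(target, idea))


# Placeholder coordinates, invented for illustration only.
ideas = [
    SignalIdea("Hello", urgency=0.1, affect=0.1, verbal=0.3),
    SignalIdea("Too Close", urgency=0.9, affect=0.8, verbal=0.1),
    SignalIdea("Lost", urgency=0.6, affect=0.9, verbal=0.2),
]
```

Placing a new idea on the map and asking `nearest(new_idea, ideas)` is one quick way to spot where a palette already has coverage and where it has gaps.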

Sound design for non-verbal communication

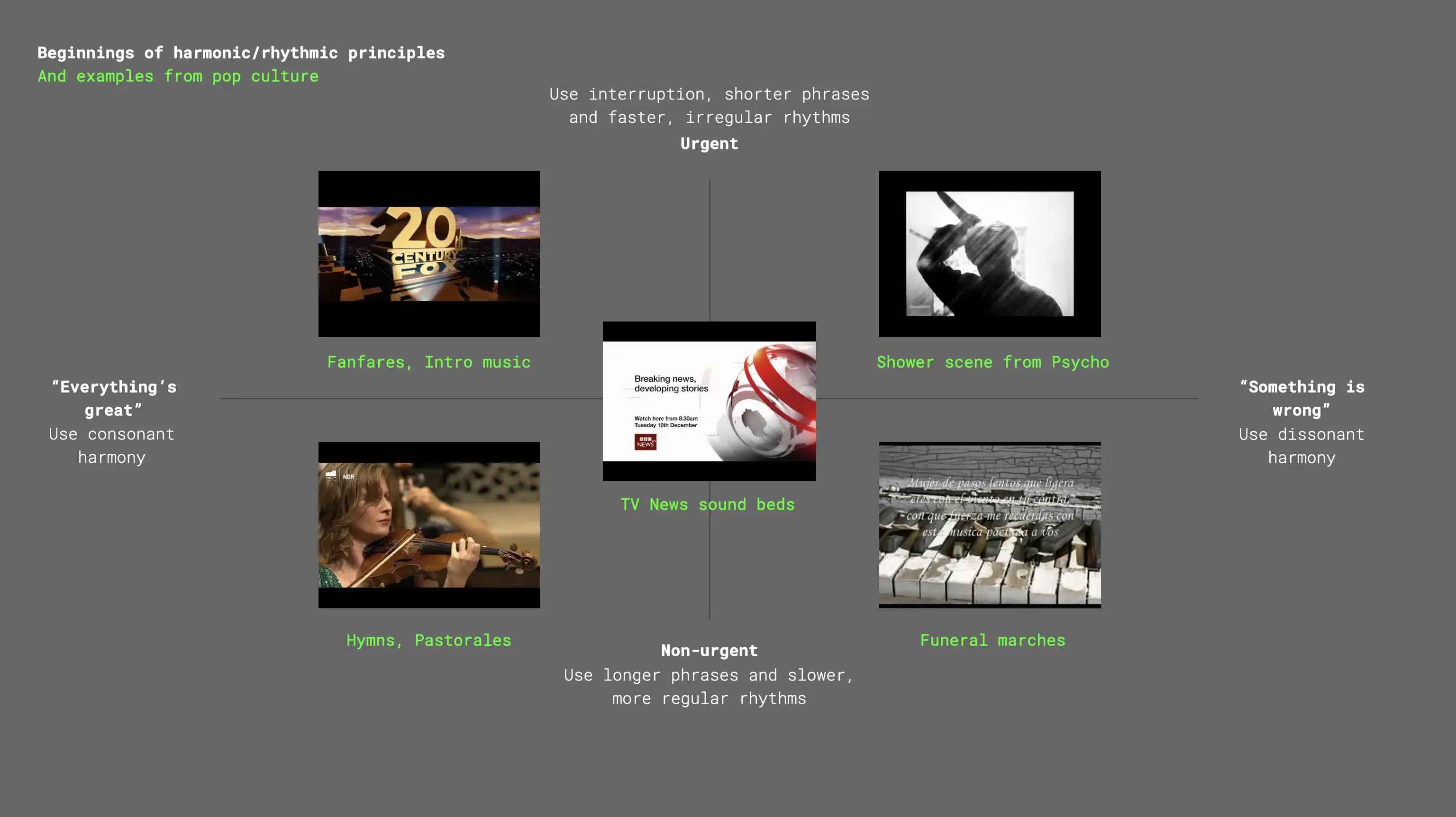

It’s easy to assume we’ll mostly interact with robots by talking to them, but there are a huge number of cases where this won’t be appropriate. Can we help people understand – non-verbally – when a robot is working, thinking, navigating, or has a low battery, or no signal? I started by sketching out a cognitive scaffolding that taps into familiar sonic conventions from film and TV.

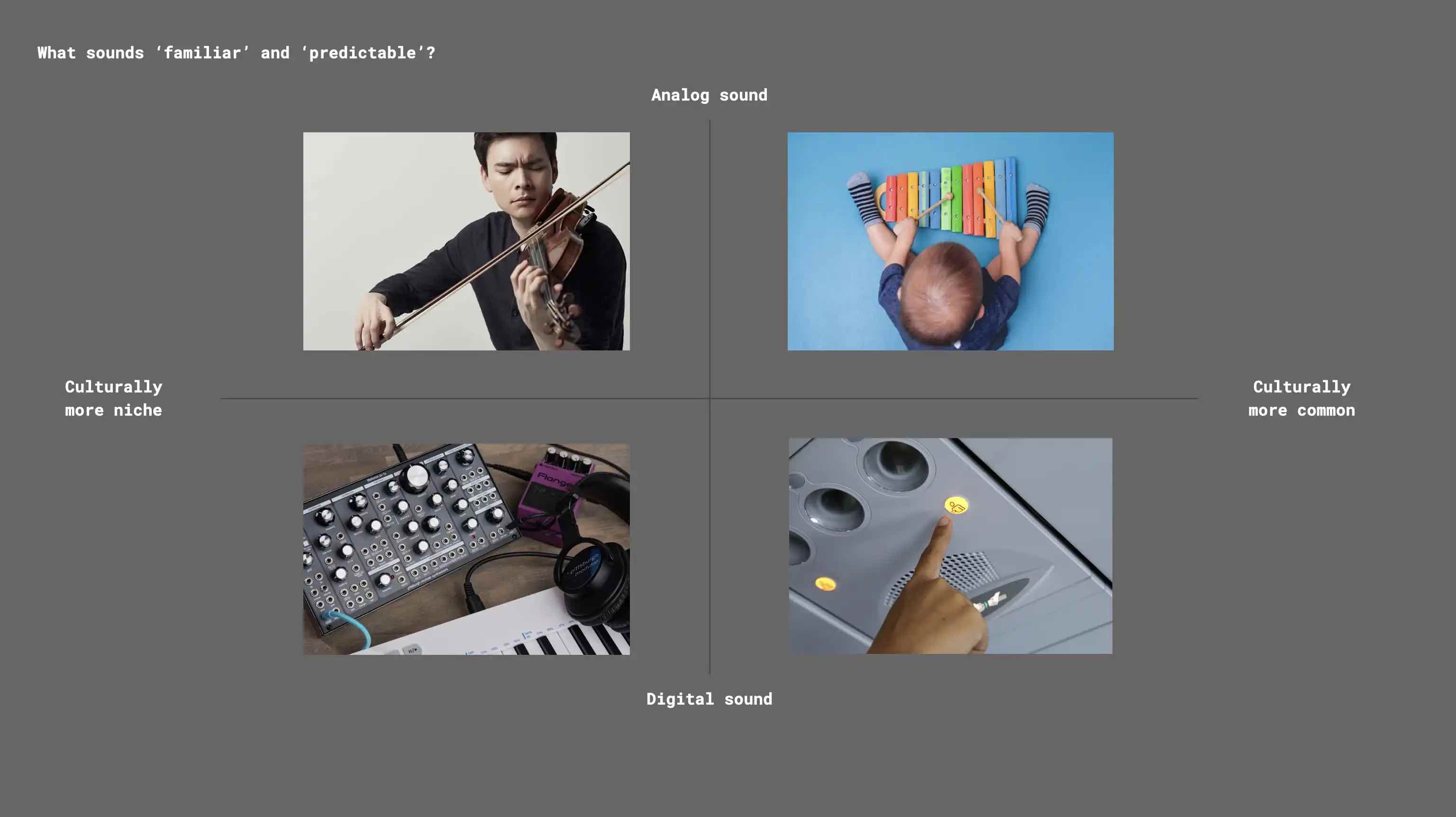

I did the same with timbre, creating a framework to reference culturally common sounds (e.g. from childhood xylophones or subway cars), or more rarefied, niche ones (like futuristic synths or classy violins).

With this scaffolding in place, I jumped into Logic and composed a set of common signals that could be styled in different ways, from simple sine wave tones through to sci-fi vocaloid utterances. Here are a few of them…

Shutdown

Charging

Moving

Hello

Listening

Thinking

Understood

Too Close

Lost
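The sounds above were composed in Logic, but the simplest end of the styling range, a plain sine wave tone, is easy to sketch in code too. The snippet below is a minimal standard-library illustration, not the project's actual pipeline; the two-note ‘Understood’ motif, its pitches, and its durations are arbitrary assumptions.

```python
import math
import struct
import wave

SAMPLE_RATE = 44100  # samples per second


def sine_tone(freq_hz: float, duration_s: float, amplitude: float = 0.5) -> list[float]:
    """Return a sine tone with short linear fades to avoid clicks."""
    n = int(SAMPLE_RATE * duration_s)
    fade = max(1, min(n // 2, int(SAMPLE_RATE * 0.01)))  # up to 10 ms fade in and out
    samples = []
    for i in range(n):
        envelope = min(1.0, i / fade, (n - 1 - i) / fade)
        samples.append(amplitude * envelope * math.sin(2 * math.pi * freq_hz * i / SAMPLE_RATE))
    return samples


def write_wav(path: str, samples: list[float]) -> None:
    """Write mono 16-bit PCM audio to a WAV file."""
    frames = b"".join(
        struct.pack("<h", int(max(-1.0, min(1.0, s)) * 32767)) for s in samples
    )
    with wave.open(path, "wb") as wav:
        wav.setnchannels(1)
        wav.setsampwidth(2)
        wav.setframerate(SAMPLE_RATE)
        wav.writeframes(frames)


# Hypothetical 'Understood' motif: two rising notes (E5 then B5), chosen arbitrarily.
understood = sine_tone(659.26, 0.12) + sine_tone(987.77, 0.18)
```

Calling `write_wav("understood.wav", understood)` saves the motif so it can be auditioned next to richer, styled versions of the same signal.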

Sketching in code

After sketching a load of sound variations, I built a simulator in Unity which let us test them out in different environmental contexts. I layered in ambient noise from kitchens, offices, and meeting rooms, and added the ability to listen from different distances (e.g. from ‘up close while typing’ to ‘further away in a noisy cafe’). Here it is in manual mode, where you can drive the robot around, triggering different states…

… and here’s ‘Scene’ mode, which let us storyboard and animate start-to-finish moments like startup, shutdown, scanning, thinking, Wi-Fi disconnected, and so on.
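To give a feel for why listening distance and ambient noise matter so much, here is a back-of-envelope Python sketch. It assumes the free-field inverse-distance law (roughly 6 dB quieter per doubling of distance) and is not how the Unity simulator was implemented; the function names and all the levels are illustrative guesses.

```python
import math


def level_at_distance(level_db_at_ref: float, distance_m: float, ref_m: float = 1.0) -> float:
    """Free-field inverse-distance law: about 6 dB quieter per doubling of distance."""
    if distance_m <= 0 or ref_m <= 0:
        raise ValueError("distances must be positive")
    return level_db_at_ref - 20 * math.log10(distance_m / ref_m)


def signal_to_ambient_db(signal_db_at_ref: float, distance_m: float, ambient_db: float) -> float:
    """How far the signal sits above (positive) or below (negative) the ambient bed."""
    return level_at_distance(signal_db_at_ref, distance_m) - ambient_db


# Hypothetical listening contexts; the levels are illustrative guesses, not measurements.
contexts = {
    "up close while typing": {"distance_m": 0.5, "ambient_db": 40.0},
    "further away in a noisy cafe": {"distance_m": 4.0, "ambient_db": 70.0},
}

for name, ctx in contexts.items():
    margin = signal_to_ambient_db(65.0, ctx["distance_m"], ctx["ambient_db"])
    print(f"{name}: {margin:+.1f} dB relative to ambient")
```

Real rooms add reflections and masking that this ignores, which is a good argument for listening in a simulator or a physical rig instead of trusting the arithmetic.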

I also spent some time sampling all the motors and fans inside each robot, which let me design sounds in tune with them. Turns out these guys liked whirring around in the key of E.
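Once you have a dominant frequency for a motor or fan (for example from a spectrum analyser), mapping it to a musical note is a small calculation. The sketch below uses the standard equal-temperament formula with A4 = 440 Hz; the `nearest_note` helper is hypothetical, and the example frequency is a textbook pitch, not a measurement from the robots.

```python
import math

NOTE_NAMES = ["C", "C#", "D", "D#", "E", "F", "F#", "G", "G#", "A", "A#", "B"]


def nearest_note(freq_hz: float, a4_hz: float = 440.0) -> tuple[str, int, float]:
    """Return (note name, octave, cents offset) for the nearest equal-tempered pitch."""
    if freq_hz <= 0:
        raise ValueError("frequency must be positive")
    midi_exact = 69 + 12 * math.log2(freq_hz / a4_hz)  # MIDI note 69 is A4
    midi = round(midi_exact)
    cents = (midi_exact - midi) * 100  # positive = sharp, negative = flat
    return NOTE_NAMES[midi % 12], midi // 12 - 1, cents


# Illustrative input only: 82.41 Hz is the textbook frequency of E2.
name, octave, cents = nearest_note(82.41)
print(f"{name}{octave} ({cents:+.1f} cents)")
```

The cents offset shows how far a hum drifts from the nearest note, which helps when deciding whether to tune designed sounds to the machine or to concert pitch.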

Physical prototyping

After we had a few sets of software sketches, I built some physical rigs to let us try out sounds in real environments. This one had a two-channel setup, with a bassier speaker for the body and a directional tweeter up top that could rotate like a head. The fancy Genelec speakers let us simulate a huge range of audio quality, which always helps when writing specs for the real version.

Sketch prototyping as strategy

This type of work, whether it be for megacorps, startups, academia or cultural orgs, is all about reducing risk and revealing opportunities. Sketch prototyping in software and hardware helps us literally get a feel for ideas quickly and cheaply before needing to commit to specifications or requirements documents, and can also help slingshot strategy into new, unexplored areas. As the saying goes, Making Is Thinking.